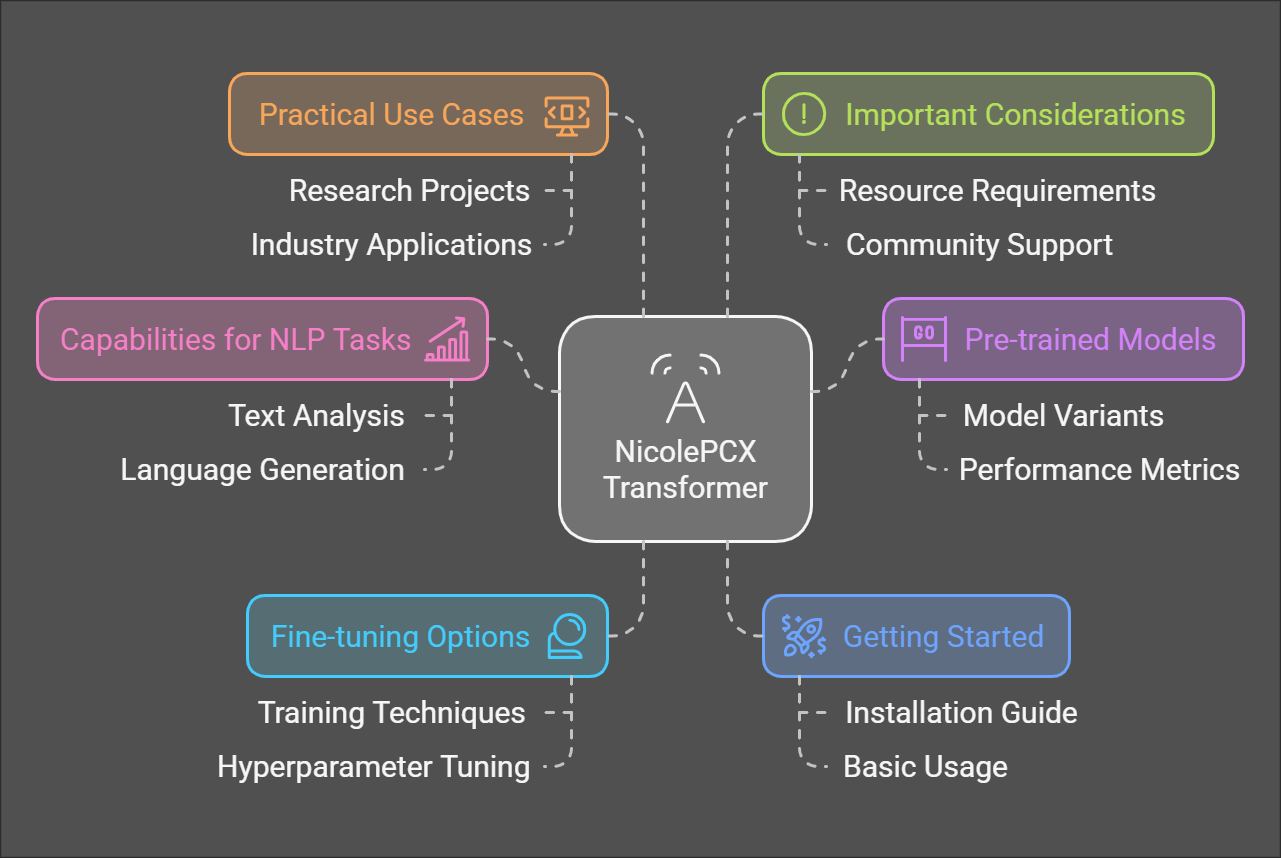

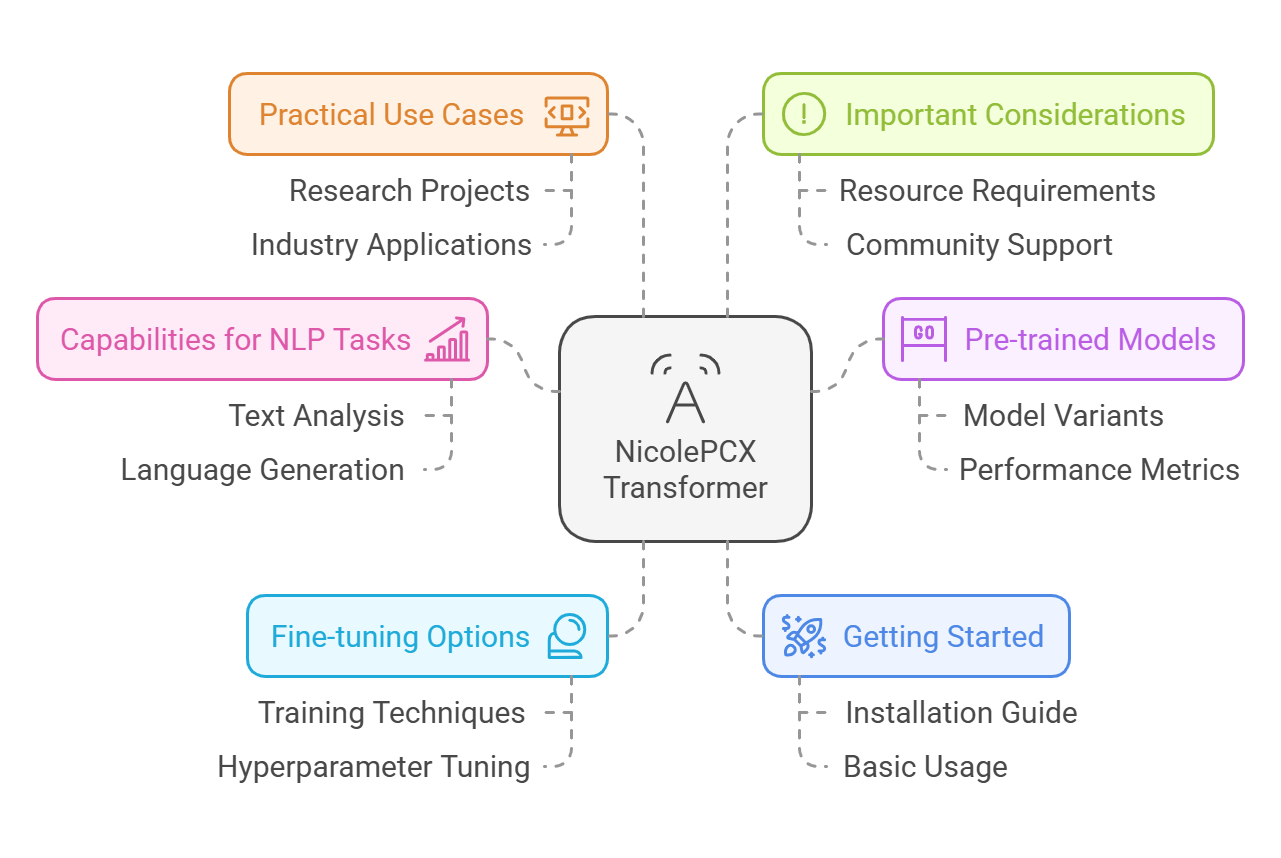

Learn everything about the NicolePCX Transformer on GitHub. Explore its capabilities for NLP tasks, pre-trained models, fine-tuning options, and more in this definitive guide. Get started today!

GitHub has become a central hub for developers across the globe, enabling them to share, collaborate, and build upon each other’s projects. Among the vast sea of open-source repositories, the NicolePCX Transformer stands out as a highly effective tool that has gained popularity for its ability to improve machine learning workflows. Whether you’re an AI researcher, a data scientist, or just someone curious about machine learning, the NicolePCX Transformer repository on GitHub is a must-explore resource.

In this article, we’ll delve into what the NicolePCX Transformer is, how it functions, and why it has become a game-changer in the realm of machine learning. We will also explore how to get started with the repository, provide practical use cases, and highlight some important considerations when working with this project.

What is NicolePCX Transformer?

The NicolePCX Transformer is a machine learning model designed to facilitate high-level natural language processing (NLP) tasks. It is part of a growing suite of transformer-based models that are revolutionizing the field of AI. The transformer architecture, introduced in 2017 by Vaswani et al., has since been adopted widely in NLP due to its ability to handle long-range dependencies in text data effectively.

The NicolePCX Transformer repository on GitHub provides a set of tools, pre-trained models, and scripts that enable developers to use transformer-based techniques to perform various tasks such as:

- Text classification

- Text generation

- Named entity recognition (NER)

- Language translation

- Sentiment analysis

Developed by the contributor NicolePCX, this GitHub repository offers users an optimized, user-friendly version of the transformer architecture that can be easily integrated into both academic research and real-world applications.

Key Features of the NicolePCX Transformer

- Pre-trained Models

One of the standout features of the NicolePCX Transformer is the availability of pre-trained models. This means that developers can leverage these models for tasks without needing to train them from scratch. Pre-trained models save time and computational resources, making it easy to get started with machine learning.

- Fine-Tuning Capabilities

While pre-trained models offer great convenience, the NicolePCX Transformer also allows users to fine-tune the models on their specific datasets. This capability ensures that the model can be customized to solve specific problems, improving performance in niche use cases.

- Extensive Documentation

The repository is known for its comprehensive documentation, making it easy for both beginners and advanced users to get up to speed. Whether you’re new to transformers or a seasoned AI practitioner, the clear instructions and well-organized structure make it easy to understand and implement.

- Integration with Other Frameworks

The NicolePCX Transformer is compatible with popular machine learning frameworks like TensorFlow and PyTorch, allowing users to seamlessly integrate it into their existing workflows. This versatility means that the repository can fit well into various types of projects.

- State-of-the-Art Performance

The transformer models in this repository are optimized for performance. Whether you’re working with large datasets or needing high-speed processing, the NicolePCX Transformer is built to deliver efficient results.

How Does the NicolePCX Transformer Work?

The NicolePCX Transformer builds on self-attention, the core mechanism of transformer models. Unlike recurrent neural networks (RNNs), which process a sequence one token at a time, transformers use attention to weigh the importance of every word in a sequence against every other word, regardless of how far apart they are.
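To make the idea concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It illustrates the general technique from the original transformer paper and is not code from the NicolePCX repository; the function names and the toy token vectors are assumptions made for this example, and the learned query, key, and value projections of a real model are left out.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this position against every position in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors (hypothetical); a real model derives Q, K, and V
# from learned linear projections of the token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
```

In this toy input the first token attends to itself and to the third token with equal weight, because the scores depend only on vector similarity and not on distance within the sequence.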

This architecture is particularly powerful for NLP tasks, as it can process and understand the relationships between words that might be far apart in the text. By leveraging multi-head attention mechanisms, transformers can create more context-aware representations of text.
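The multi-head idea can be sketched in a few lines of plain Python. This is a simplified illustration, not the repository's implementation: it splits each token vector into equal slices, runs attention on each slice independently, and concatenates the results. Real multi-head attention also applies learned projection matrices per head and a final output projection, which are omitted here; the names `attention` and `multi_head_attention` are assumptions made for this example.

```python
import math

def attention(q_rows, k_rows, v_rows):
    """Scaled dot-product attention over lists of row vectors."""
    d_k = len(k_rows[0])
    out = []
    for q in q_rows:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in k_rows]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        out.append([sum(w * v[j] for w, v in zip(weights, v_rows))
                    for j in range(len(v_rows[0]))])
    return out

def multi_head_attention(x, num_heads):
    """Split features into heads, attend per head, then concatenate."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        # Each head sees its own slice of every token vector.
        chunk = [row[h * d_head:(h + 1) * d_head] for row in x]
        head_outputs.append(attention(chunk, chunk, chunk))
    # Concatenate the heads back into d_model features per token.
    return [sum((head[i] for head in head_outputs), []) for i in range(len(x))]

# Hypothetical 3-token sequence with 4 features per token.
x = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 0.0]]
y = multi_head_attention(x, num_heads=2)
```

Because each head computes its own attention weights over a different slice of the features, the heads can focus on different relationships between the same pair of words.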

Steps to Get Started with NicolePCX Transformer

To get started with the NicolePCX Transformer, follow these simple steps:

1. Clone the Repository

First, clone the NicolePCX Transformer repository from GitHub. Cloning downloads the code to your machine and gives you access to the pre-trained models. Use the following command in your terminal:

git clone https://github.com/NicolePCX/transformer.git

2. Install Dependencies

Once you have cloned the repository, you will need to install the necessary dependencies, such as PyTorch or TensorFlow, depending on the framework you choose. You can usually find these in the repository’s requirements.txt file:

pip install -r requirements.txt

3. Load a Pre-trained Model

After setting up the environment, you can load a pre-trained model to start using the transformer for NLP tasks. Example code for loading a model might look like this:

from nicolepcx_transformer import TransformerModel

model = TransformerModel.load_pretrained("model_name")
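Since the loading snippet is only an example of what the code might look like, it can help to confirm that the module is importable before relying on it. The sketch below uses only the Python standard library; the module name is taken from the import line above, and whether it matches the actual repository layout is an assumption to verify against its documentation. The helper name `is_installed` is made up for this example.

```python
import importlib.util

# Module name copied from the import line above; treat it as an assumption
# until it is confirmed against the repository's own documentation.
MODULE_NAME = "nicolepcx_transformer"

def is_installed(module_name):
    """Return True if a top-level module can be located without importing it."""
    return importlib.util.find_spec(module_name) is not None

if is_installed(MODULE_NAME):
    print(f"{MODULE_NAME} is importable; the loading snippet should resolve.")
else:
    print(f"{MODULE_NAME} not found; check the repository's install instructions.")
```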

4. Fine-Tuning the Model

If you want to fine-tune the pre-trained model for your specific task, you can do so by using the repository’s provided functions. Fine-tuning typically involves training the model on your dataset for a few epochs:

model.fine_tune(train_data, epochs=5)
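To show what "training for a few epochs" means in practice, here is a toy epoch loop in plain Python. It is not the repository's `fine_tune` function and does not involve a transformer: it fits a one-feature logistic regression with stochastic gradient descent, purely to illustrate that each epoch is one full pass over the training data and that the loss typically falls from pass to pass. The dataset, learning rate, and function names are all assumptions made for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, weight=0.0, bias=0.0, epochs=5, lr=0.5):
    """Run `epochs` full passes of SGD over (feature, label) pairs.

    Returns the final weight, the final bias, and the mean loss per epoch.
    """
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for x, y in data:
            p = sigmoid(weight * x + bias)
            # Binary cross-entropy for this example, clamped to avoid log(0).
            p_safe = min(max(p, 1e-12), 1 - 1e-12)
            total_loss += -(y * math.log(p_safe) + (1 - y) * math.log(1 - p_safe))
            # The gradient of the loss with respect to the pre-activation is (p - y).
            weight -= lr * (p - y) * x
            bias -= lr * (p - y)
        history.append(total_loss / len(data))
    return weight, bias, history

# Hypothetical toy dataset: negative features are class 0, positive are class 1.
train_data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
weight, bias, history = train(train_data, epochs=5)
```

Fine-tuning a pre-trained model follows the same pattern, except that the parameters start from the pre-trained values rather than from zero, which is why a handful of epochs is often enough.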

5. Perform NLP Tasks

Once the model is ready, you can use it to perform various NLP tasks, such as text classification, language translation, or sentiment analysis. The repository provides scripts for running these tasks directly.

Use Cases for the NicolePCX Transformer

The NicolePCX Transformer is versatile and can be applied to a wide range of NLP applications, including:

- Text Classification: Categorizing text data into predefined labels (e.g., spam detection, sentiment analysis).

- Named Entity Recognition (NER): Identifying entities such as names, dates, and locations in a text.

- Text Generation: Generating coherent text based on a given prompt, useful in chatbots and creative writing.

- Machine Translation: Translating text between different languages, utilizing the model’s capability to handle multiple languages.
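For classification-style use cases such as sentiment analysis, a model typically produces one raw score (logit) per label, and a softmax turns those scores into probabilities. The sketch below shows that final step in plain Python. The label names and logit values are hypothetical and do not come from the NicolePCX repository, and `predict_label` is a helper invented for this example.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_label(logits, labels):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical labels and model outputs for a single input sentence.
labels = ["negative", "neutral", "positive"]
logits = [0.2, 0.1, 2.3]
label, confidence = predict_label(logits, labels)
print(label, round(confidence, 3))
```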

FAQs

1. What is the NicolePCX Transformer?

The NicolePCX Transformer is a GitHub repository offering a transformer-based model that can be used for various natural language processing tasks, such as text classification, sentiment analysis, and more.

2. Is it necessary to train the model from scratch?

No, the repository provides pre-trained models that can be used directly. Additionally, you can fine-tune them on your own data for more specialized tasks.

3. What frameworks are compatible with the NicolePCX Transformer?

The NicolePCX Transformer is compatible with both TensorFlow and PyTorch, making it flexible for different types of machine learning workflows.

4. Can the model be used for tasks other than text classification?

Yes, the model can be used for various NLP tasks such as named entity recognition (NER), text generation, and language translation.

5. How can I get started with the NicolePCX Transformer?

To get started, clone the repository from GitHub, install the dependencies, load a pre-trained model, and begin applying it to your desired NLP tasks.