FollowEval is an open-source benchmark, hosted on GitHub, for evaluating instruction-following capabilities in large language models (LLMs). This guide covers its features, setup, use cases, and contribution process, and ends with FAQs.

Introduction to FollowEval GitHub

FollowEval is an open-source benchmark, hosted on GitHub, for evaluating the instruction-following capabilities of large language models (LLMs). As AI systems evolve, how well an LLM comprehends and executes complex instructions has become a key measure of its performance.

Developers and researchers use FollowEval to assess these models and to find where their accuracy, responsiveness, and reliability fall short.

What is FollowEval?

FollowEval is a multi-dimensional evaluation framework designed to assess the instruction-following capabilities of artificial intelligence (AI) systems. As AI continues to evolve and integrate into applications such as virtual assistants, chatbots, and automation tools, ensuring that these systems can accurately interpret and execute instructions has become increasingly important. FollowEval provides a structured approach to test, analyze, and improve AI performance by simulating real-world tasks and challenges.

This framework is particularly valuable for developers and researchers focused on creating large language models (LLMs) that can understand complex queries, follow rules, and provide precise responses. By offering a variety of test cases and metrics, FollowEval helps identify gaps in performance and areas where AI models may need further refinement.

Key Evaluation Areas in FollowEval

1. String Manipulation:

AI systems often handle text processing tasks such as extracting information, transforming formats, and organizing data. FollowEval tests whether models can handle textual data efficiently and produce results that adhere to specific input-output formats.

2. Commonsense Reasoning:

Real-world applications require AI to demonstrate common sense by applying logic to everyday scenarios. FollowEval evaluates whether the AI can process implicit knowledge, make assumptions, and generate outputs based on contextual understanding.

3. Logical Reasoning:

AI models must be able to solve problems logically using structured steps. FollowEval assesses how well systems handle tasks like pattern recognition, decision trees, and deductive reasoning, ensuring the model follows a coherent thought process.

4. Spatial Reasoning:

Spatial awareness is essential in tasks requiring positioning, relationships, and measurements. FollowEval evaluates whether AI systems can comprehend and manipulate spatial information, which is particularly useful in applications such as robotics and navigation tools.

5. Constraint Adherence:

AI systems often work under specific rules and constraints, such as formatting requirements or character limits. FollowEval tests whether a model can strictly follow predefined conditions, ensuring reliability and compliance in high-stakes environments like legal or medical fields.
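A constraint check of this kind is typically rule-based. The following is a minimal Python sketch, not FollowEval's actual code: the function name `check_constraints`, the sample instruction, and the regular expression are all assumptions made for illustration.

```python
import re


def check_constraints(response: str, max_chars: int, required_pattern: str) -> dict:
    """Report which constraints a model response satisfies."""
    within_limit = len(response) <= max_chars
    # fullmatch requires the whole response to follow the format, not just part of it.
    matches_format = re.fullmatch(required_pattern, response) is not None
    return {
        "within_limit": within_limit,
        "matches_format": matches_format,
        "passed": within_limit and matches_format,
    }


# Hypothetical instruction: "Answer with exactly three lowercase words,
# separated by commas with no spaces, in at most 30 characters."
pattern = r"[a-z]+,[a-z]+,[a-z]+"

good = check_constraints("red,green,blue", max_chars=30, required_pattern=pattern)
bad = check_constraints("Red, Green, Blue", max_chars=30, required_pattern=pattern)

print(good["passed"])  # True
print(bad["passed"])   # False: capital letters and spaces break the format
```

Reporting each constraint separately, rather than a single pass/fail flag, makes it easier to see whether a model misses the length limit, the format, or both.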

A Holistic Approach to AI Evaluation

By covering these dimensions, FollowEval offers a holistic evaluation framework that mirrors the complexity of real-world scenarios. Its ability to measure performance across multiple domains makes it an ideal tool for developers looking to fine-tune AI systems for practical applications.

Whether it’s enhancing customer support chatbots, automated data processors, or AI-driven virtual assistants, FollowEval ensures models are not just functional but also responsive, logical, and context-aware.

Furthermore, the open-source availability of FollowEval GitHub encourages collaboration and innovation within the AI community. Developers can contribute to its growth by adding new tests, improving existing cases, and refining evaluation criteria.

In summary, FollowEval is a powerful benchmarking tool that plays a critical role in advancing AI development by providing developers with the resources they need to evaluate, test, and improve AI capabilities effectively.

Key Features of FollowEval GitHub

The features below explain why AI researchers, developers, and organizations use FollowEval to assess and improve the instruction-following capabilities of their LLMs. Each one is described with its practical benefits and technical strengths.

1. Multi-Dimensional Testing

One of the standout features of FollowEval GitHub is its ability to conduct multi-dimensional testing. Unlike traditional evaluation tools that assess a limited set of parameters, FollowEval takes a broader approach.

- It evaluates multiple dimensions, such as string manipulation, logical reasoning, commonsense understanding, spatial awareness, and response constraints.

- This approach provides a holistic view of a model’s strengths and weaknesses, enabling developers to identify specific areas that require improvement.

- Testing across multiple dimensions also simulates real-world scenarios, ensuring that models are prepared to handle complex and layered instructions effectively.

By analyzing each dimension in detail, FollowEval GitHub ensures comprehensive testing that reflects how well a model can follow commands in practical applications.

2. Complex Test Cases

FollowEval GitHub includes complex test cases that challenge AI models to go beyond simple responses and tackle tasks requiring multi-step reasoning.

- The test cases are designed to replicate real-life challenges, pushing models to process and execute instructions step-by-step.

- These tests cover a variety of tasks, including logical puzzles, contextual reasoning, and hierarchical operations.

- Developers can assess how well a model performs tasks requiring layered thinking or combining multiple pieces of information to produce accurate outputs.

For example, a task may require an AI model to rearrange data, extract specific details, and present results in a specific format—all in one query. This level of testing reflects real-world applications where AI needs to perform complex operations rather than just answering simple questions.
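To make the multi-step idea concrete, here is a small Python sketch of how such a task could be scored. The task itself (sort `name:age` records by age, then list only the names as a numbered list) and the helper names `expected_output` and `is_correct` are hypothetical; they are not taken from the FollowEval repository.

```python
def expected_output(records: list[str]) -> str:
    """Build the reference answer for a three-step instruction."""
    # Step 1: extract the name and age from each "name:age" record.
    parsed = [(name, int(age)) for name, age in (r.split(":") for r in records)]
    # Step 2: rearrange the records by age, youngest first.
    parsed.sort(key=lambda item: item[1])
    # Step 3: present only the names, as a numbered list.
    return "\n".join(f"{i}. {name}" for i, (name, _) in enumerate(parsed, start=1))


def is_correct(model_response: str, records: list[str]) -> bool:
    """A response passes only if every step was carried out correctly."""
    return model_response.strip() == expected_output(records)


records = ["Ana:34", "Bo:27", "Cy:41"]
print(expected_output(records))
print(is_correct("1. Ana\n2. Bo\n3. Cy", records))  # False: the sorting step was skipped
```

A model that extracts the names but skips the sorting step still fails, which is what makes a layered instruction harder than three separate questions.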

3. Human Benchmarks

Benchmarking against human performance is a key strength of FollowEval GitHub. This feature provides a reference point, allowing developers to understand how AI compares to human-level reasoning and accuracy.

- The benchmark tests measure metrics such as accuracy, efficiency, and response relevance.

- AI outputs are compared to those generated by humans performing the same tasks, enabling a direct performance evaluation.

- This approach helps developers identify gaps where AI systems may fall short of human intelligence, guiding further improvements.

FollowEval GitHub also includes tools for visualizing performance trends over time, helping teams track progress as they refine their models.

4. Open-Source Flexibility

As an open-source tool, FollowEval GitHub offers exceptional flexibility for customization and collaboration.

- Developers can fork the repository, make enhancements, and share improvements with the community.

- The open-source nature encourages contributions from AI enthusiasts, ensuring continuous updates and feature expansions.

- Users can modify test cases to address specific evaluation needs, tailoring the framework to suit different models and applications.

By leveraging GitHub’s collaborative features, FollowEval fosters a global developer community that collectively works to enhance AI evaluation techniques.

5. Scalability

FollowEval GitHub is designed with scalability in mind, making it suitable for evaluating models of all sizes—from small prototypes to enterprise-level AI systems.

- Developers can test multiple AI models simultaneously, making it ideal for comparing performance across different architectures.

- It supports cloud-based deployment, enabling teams to run large-scale tests without requiring extensive local resources.

- Scalability also ensures that businesses can test models for specific use cases, whether they are building chatbots, virtual assistants, or complex automation systems.

This flexibility is particularly beneficial for organizations looking to scale AI adoption without compromising evaluation quality.
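Comparing several models on the same test cases can be sketched with Python's standard `concurrent.futures` module. This is an illustrative assumption about how such a comparison might be wired up, not a description of FollowEval's own runner; `compare_models`, `accuracy`, and the two stand-in models are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Model = Callable[[str], str]  # a model is anything that maps a prompt to a response


def accuracy(model: Model, cases: list[tuple[str, str]]) -> float:
    """Fraction of cases where the response equals the expected answer."""
    correct = sum(1 for prompt, expected in cases if model(prompt).strip() == expected)
    return correct / len(cases)


def compare_models(models: dict[str, Model], cases: list[tuple[str, str]]) -> dict[str, float]:
    """Score every model on the same cases, one worker thread per model."""
    with ThreadPoolExecutor(max_workers=len(models)) as pool:
        futures = {name: pool.submit(accuracy, model, cases) for name, model in models.items()}
        return {name: future.result() for name, future in futures.items()}


# Stand-in "models" so the sketch runs without any real LLM.
def upper_model(prompt: str) -> str:
    return prompt.upper()


def echo_model(prompt: str) -> str:
    return prompt


cases = [("abc", "ABC"), ("Hi", "HI")]  # hypothetical "uppercase this text" task
scores = compare_models({"upper": upper_model, "echo": echo_model}, cases)
print(scores)
```

Threads suit this pattern when each model call waits on a remote API; for models running locally on a GPU, sequential evaluation is often the simpler choice.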

6. Comprehensive Documentation

Another feature that makes FollowEval GitHub user-friendly is its comprehensive documentation.

- The repository includes detailed instructions for installation, configuration, and testing, making it accessible even for those with minimal experience in AI evaluation.

- Examples and tutorials guide users through setup and execution, reducing the learning curve.

- Developers can also access troubleshooting tips and FAQs, ensuring a smooth onboarding process.

The documentation includes sample commands, test scripts, and explanations of evaluation metrics, making it a one-stop resource for both beginners and experienced AI researchers.

7. Support for Customization

FollowEval GitHub is highly customizable, enabling users to adapt it to their specific requirements.

- Developers can create custom datasets and integrate them into test cases.

- Scripts can be modified to test unique scenarios or evaluate niche capabilities.

- Customization options make it easier to align FollowEval with specific research goals or industry standards.

This adaptability ensures that FollowEval remains a versatile tool, suitable for evaluating AI systems in diverse applications.
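As an illustration of what a custom dataset might look like, the sketch below loads test cases from JSON Lines text. The schema (`dimension`, `instruction`, `answer_pattern`) and the names `EvalCase` and `load_cases` are assumptions for this example; check the repository for the format FollowEval actually expects.

```python
import json
from dataclasses import dataclass


@dataclass
class EvalCase:
    dimension: str       # e.g. "string_manipulation"
    instruction: str     # the prompt given to the model
    answer_pattern: str  # regex a correct response must match (hypothetical field)


def load_cases(jsonl_text: str) -> list[EvalCase]:
    """Parse one JSON object per non-empty line into an EvalCase."""
    cases = []
    for line in jsonl_text.splitlines():
        if line.strip():
            cases.append(EvalCase(**json.loads(line)))
    return cases


sample = '''
{"dimension": "string_manipulation", "instruction": "Reverse the word 'stone'.", "answer_pattern": "enots"}
{"dimension": "logical_reasoning", "instruction": "All A are B and x is an A. Is x a B? Answer yes or no.", "answer_pattern": "(?i)^yes"}
'''

cases = load_cases(sample)
print(len(cases), cases[0].dimension)
```

Keeping one case per line makes it easy to append new cases or filter a dataset by dimension with ordinary text tools.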

8. Integration with Other Tools

FollowEval GitHub supports integration with popular machine learning libraries and tools, including PyTorch, TensorFlow, and Hugging Face Transformers.

- These integrations simplify the process of connecting AI models to the evaluation framework.

- Developers can run tests directly within their existing pipelines, saving time and effort.

- Integration with visualization tools allows for data-driven insights, enabling teams to identify performance trends and areas for improvement quickly.

9. Real-World Applications

The test cases and evaluation metrics in FollowEval GitHub are inspired by real-world applications.

- It evaluates models for tasks such as customer support automation, data analysis, and document processing.

- By simulating practical scenarios, FollowEval GitHub ensures that AI models are not only accurate but also useful in solving everyday challenges.

Setting Up FollowEval from GitHub

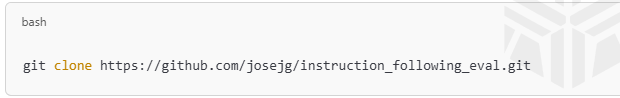

Step 1: Clone the Repository

Download the FollowEval project from GitHub:

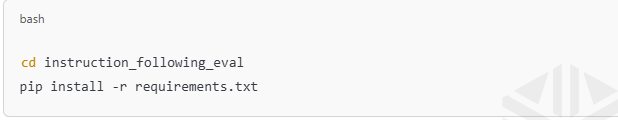

Step 2: Install Dependencies

Navigate to the project directory and install the required dependencies.

Step 3: Configure the Environment

Follow instructions in the README.md file to set up environment variables.
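A quick pre-flight check can confirm that the variables are set before any tests run. The variable names below are placeholders invented for this sketch; use whichever names the README actually specifies.

```python
import os

# Hypothetical variable names: replace with the ones listed in the README.
REQUIRED_VARS = ["FOLLOWEVAL_MODEL_NAME", "FOLLOWEVAL_API_KEY"]


def missing_variables(env=os.environ, required=REQUIRED_VARS) -> list[str]:
    """Return the required variables that are unset or empty."""
    return [name for name in required if not env.get(name)]


missing = missing_variables()
if missing:
    print("Missing environment variables:", ", ".join(missing))
else:
    print("Environment looks complete.")
```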

Step 4: Verify Installation

Run a sample test from the repository to confirm that the setup succeeded.

Running Evaluations with FollowEval

Step 1: Prepare the Model

Ensure the model you want to evaluate is accessible and configured properly.

Step 2: Load Test Scripts

Use predefined test cases from the repository or create custom scripts.

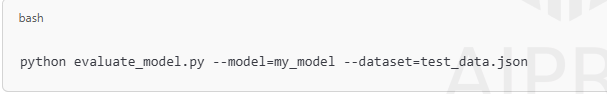

Step 3: Execute the Tests

Run the tests, specifying the model inputs and parameters.

Step 4: Analyze Outputs

Collect and review evaluation metrics generated by the test scripts.
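Steps 3 and 4 can be pictured as a short loop: send each instruction to the model, check the response against a rule, and record the outcome. The sketch below assumes a regex-based check and uses hypothetical names (`run_tests`, `toy_model`, and the case fields); it is not the repository's own test script.

```python
import re
from typing import Callable


def run_tests(model: Callable[[str], str], cases: list[dict]) -> list[dict]:
    """Run every case through the model and record whether the rule matched."""
    results = []
    for case in cases:
        response = model(case["instruction"])
        passed = re.search(case["answer_pattern"], response) is not None
        results.append({"id": case["id"], "response": response, "passed": passed})
    return results


def toy_model(instruction: str) -> str:
    # Stand-in for a real LLM call so the sketch runs on its own.
    return "Paris" if "France" in instruction else "I am not sure."


cases = [
    {"id": "c1", "instruction": "Name the capital of France in one word.", "answer_pattern": r"^Paris$"},
    {"id": "c2", "instruction": "Name the capital of Peru in one word.", "answer_pattern": r"^Lima$"},
]

results = run_tests(toy_model, cases)
for r in results:
    print(r["id"], "PASS" if r["passed"] else "FAIL", repr(r["response"]))
```

Storing the raw response next to the pass/fail flag keeps failures available for later inspection.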

Interpreting Evaluation Results

FollowEval GitHub generates results based on:

- Accuracy Scores: Percentage of tasks completed successfully.

- Error Analysis: Identifies patterns and failure points.

- Comparisons: Benchmarks performance against human results.

Developers can use these insights to refine and improve model performance.
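As a rough illustration of how these three views fit together, the sketch below computes accuracy per dimension and the gap to a human baseline. The result records and the baseline figures are made-up sample data, and `accuracy_by_dimension` is a hypothetical helper.

```python
from collections import defaultdict


def accuracy_by_dimension(results: list[dict]) -> dict[str, float]:
    """Percentage of passed cases for each evaluation dimension."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for r in results:
        totals[r["dimension"]] += 1
        passed[r["dimension"]] += int(r["passed"])
    return {dim: 100.0 * passed[dim] / totals[dim] for dim in totals}


# Made-up sample results, for illustration only.
results = [
    {"dimension": "logical", "passed": True},
    {"dimension": "logical", "passed": False},
    {"dimension": "spatial", "passed": True},
    {"dimension": "spatial", "passed": True},
]
human_baseline = {"logical": 100.0, "spatial": 100.0}  # assumed values, not real data

model_scores = accuracy_by_dimension(results)
gaps = {dim: human_baseline[dim] - score for dim, score in model_scores.items()}

for dim in model_scores:
    print(f"{dim}: model {model_scores[dim]:.1f}%, gap to human {gaps[dim]:.1f} points")
```

Breaking accuracy down by dimension shows where failures cluster, which a single overall score would hide.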

Use Cases of FollowEval GitHub

- AI Research and Development: Evaluate and improve LLM instruction-following abilities.

- Virtual Assistants: Test models used in virtual assistants like Siri and Alexa.

- Content Generation Tools: Assess AI tools designed for writing, summarizing, and answering questions.

- Academic Studies: Analyze model behavior in controlled experiments.

- Product Development: Enhance AI-powered chatbots and automation systems.

Contributing to FollowEval on GitHub

FollowEval GitHub is an open-source project, welcoming contributions.

Steps to Contribute:

- Fork the Repository: Create a copy on your account.

- Make Changes: Modify code to add features or fix bugs.

- Submit Pull Requests: Propose updates for review and approval.

- Engage in Discussions: Join GitHub discussions to share ideas and get feedback.

Best Practices for Using FollowEval

- Regular Updates: Keep your repository synced with the latest changes.

- Backup Data: Save results before making modifications.

- Documentation: Follow instructions in the README file carefully.

- Testing: Verify configurations in smaller tests before scaling.
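The last practice, testing on a small scale first, can be as simple as drawing a fixed random subset of cases for a smoke test. The helper name `smoke_subset` and the placeholder case list are assumptions for this sketch.

```python
import random


def smoke_subset(cases: list, k: int = 5, seed: int = 0) -> list:
    """Pick up to k cases reproducibly, so repeated smoke tests use the same subset."""
    rng = random.Random(seed)  # local generator: does not disturb global random state
    return rng.sample(cases, min(k, len(cases)))


all_cases = [f"case-{i}" for i in range(200)]  # placeholder for real test cases
subset = smoke_subset(all_cases, k=3)
print(subset)
```

Fixing the seed means a configuration change can be checked against the same few cases before the full suite is run.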

FAQs About FollowEval GitHub

Q1: What is FollowEval GitHub used for?

A: It evaluates the instruction-following capabilities of AI systems.

Q2: Is FollowEval free to use?

A: Yes, it is open-source and freely available on GitHub.

Q3: Can I use FollowEval with custom datasets?

A: Absolutely. It supports custom datasets for personalized evaluations.

Q4: Does FollowEval require programming skills?

A: Basic Python knowledge is recommended for setup and execution.

Q5: How do I report issues or bugs?

A: Submit bug reports on the Issues tab in the GitHub repository.

Conclusion

FollowEval gives developers, researchers, and AI enthusiasts a practical way to evaluate and improve LLMs’ instruction-following capabilities, combining a multi-dimensional testing framework with the flexibility of an open-source project.

Whether you’re working on AI assistants, chatbots, or advanced automation systems, FollowEval GitHub provides the insights needed to create more reliable and effective AI solutions. Start exploring its features today and contribute to the future of AI development!